I just helped put together a “customer satisfaction survey” for a client.

(P.S. I used open source survey software – LimeSurvey. I use free, open source software whenever I can to save money for my clients. But I don’t put up with crap. If it’s free and good quality, then I’ll use it. If it’s free and crap quality, I won’t. It’s that simple.)

Here are the 4 challenges I faced, and how I overcame them:

- Challenge #1: Generate A High Response Rate

- Challenge #2: Rewrite The Questions In A Way That Motivated The Respondents To Answer Them

- Challenge #3: Generate High Quality Responses That We Can Do Something With

- Challenge #4: Decide How To Publish The Results In A Way That Serves Our Goals For Both Current Students And Prospective Students

Challenge #1: Generate A High Response Rate

Don’t you hate it when you put blood, sweat and tears into a customer survey (or any other marketing campaign, for that matter), only to get a few responses back?

I sure do. What a waste of time.

For this customer satisfaction survey, we sent out 280 invitations and got 179 responses. That’s a response rate of 63.9%.

Do you think that’s good? I sure do.

Part of the reason for such great results might be that this group of people is particularly willing to help improve the programme.

But here are 3 more ways I helped boost that response rate:

The survey was easy, fast and short:

- Easy: Only a couple of closely related questions on each page. Every question was optional, so there were no annoying pop-up error boxes when they tried to go to the next page.

- Fast: I used radio buttons wherever I could, so the respondents could have their say with just a click. And for the free-text fields, the size of the text box indicated how short or long we wanted their answers to be.

- Short: A progress bar at the top let them know how long the survey was. I kept the questions to a minimum. We didn’t really need their contact details “just in case”, so we didn’t burden them by asking for that information.

Plus, I used my word-smithing skills to assure the respondents that their responses weren’t going to end up in a report that gets glanced at once and then sits on a shelf somewhere gathering dust (subtle techniques that I won’t describe here).

Challenge #2: Rewrite The Questions In A Way That Motivated The Respondents To Answer Them

Seems obvious, doesn’t it?

But have you ever been suckered into participating in a survey that doesn’t seem to care about your motivation?

Have you quit half way through in disgust (because of the time you’d wasted up to that point), or blundered through (paying little attention to the quality of your answers) simply because you didn’t want the entire session to be a complete waste?

I bet you have.

Here’s an example of how I rewrote a question to motivate the respondents to answer it:

Old Question: “Rate the following out of five: ‘The programme has helped me develop my knowledge, skills and attitudes’ 1 2 3 4 5”

New Question: “Apart from learning about Management, how else has the programme helped you? (Eg confidence, knowledge). What have you done since joining the programme that you would not have considered before? (Eg applying for that Management role). Tell us your story.”

You might be thinking “that question is huge, no one will bother to read it, let alone answer it!”.

Well, if you thought that, you’re dead wrong.

143 people responded to that question (a whopping 79.9% of the respondents). The average response was 42 words (about 6,000 words in total).

And we got a huge number of very quotable responses and an invaluable insight into why people choose the programme.
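If you want to check numbers like these for your own survey, here is a minimal Python sketch. The function name `response_rate` and the variable names are my own inventions for illustration (they are not part of LimeSurvey), and the inputs are simply the figures quoted in this post.

```python
def response_rate(responses: int, invited: int) -> float:
    """Return responses as a percentage of invited, rounded to one decimal place."""
    if invited <= 0:
        raise ValueError("invited must be positive")
    return round(100 * responses / invited, 1)


# Figures quoted in this post (variable names are hypothetical).
survey_respondents = 179    # people who completed the survey
question_respondents = 143  # people who answered the rewritten question
average_words = 42          # average length of an answer to that question

# Share of survey respondents who answered the rewritten question.
question_rate = response_rate(question_respondents, survey_respondents)

# Rough total word count: number of answers times average answer length.
estimated_total_words = question_respondents * average_words

print(f"Question response rate: {question_rate}%")
print(f"Estimated total words: {estimated_total_words}")
```

The same function works for the overall response rate: pass in the number of completed surveys and the number of invitations you sent.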

Challenge #3: Generate High Quality Responses That We Can Do Something With

Why spend so much time and effort conducting a customer satisfaction survey and then let the responses get dusty on a shelf somewhere?

This was our opportunity to ask our customers what changes we should make to the way we operate. But first, we had to ask questions in such a way that management would know exactly what needed to be done when those results came in.

We met that challenge with a combination of quick-response questions and long-answer questions, which stimulated thinking and produced a high level of response.

Interestingly, in previous paper-based versions of this survey, the responses to the long-answer questions were short. In this online version, responses were much longer and of much higher quality. Many were 300+ words for a single answer (that would be over a page if it were handwritten).

It was a lot for us to read, but full of very useful information.

Challenge #4: Decide How To Publish The Results In A Way That Serves Our Goals For Both Current Students And Prospective Students

How did we serve both our current students and prospective students?

We published many of the results online so that when current students come across them, they can see that we listen carefully to what they have to say. And prospective students see unedited testimonials regarding the quality of the programme.

Is Honesty Really The Best Policy?

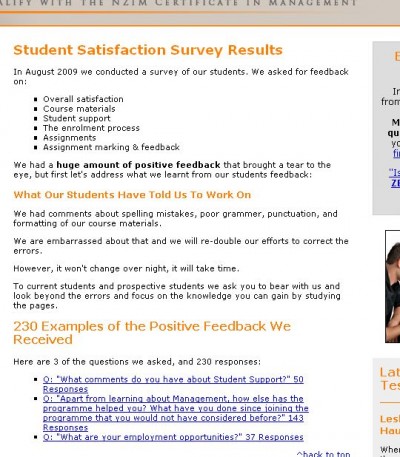

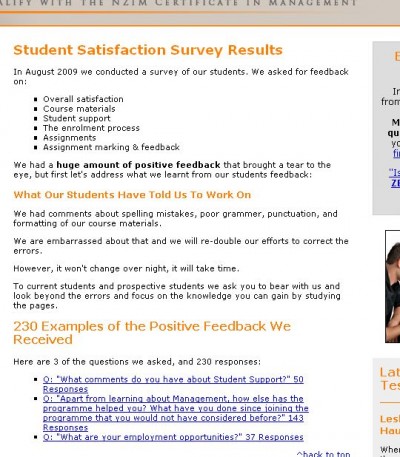

The first few paragraphs of the webpage for the survey results is shown in the screenshot below:

As you can see, we started with the bad news, “what our students have told us to work on”, which talked about spelling mistakes, poor grammar, punctuation and formatting in the course materials.

Why did we do this? 3 Reasons:

- This level of honesty is rare. It gets people’s attention. Normally, when survey results are released to the public, they are edited and the unpleasant bits are either removed or watered down.

- It provides contrast to the rest of the page, which is a list of 230 positive comments that students made about the programme.

- It has positive effects on both target groups. Current students know that we listen to them. Prospective students see that we listen to current students, and they trust us because we don’t hide the truth, so they are more likely to join us as students.

Talk to me by email or phone: (07) 575 8799.

Cheers,

Sheldon Nesdale.